What today’s healthcare AI reveals about who the system is really built for

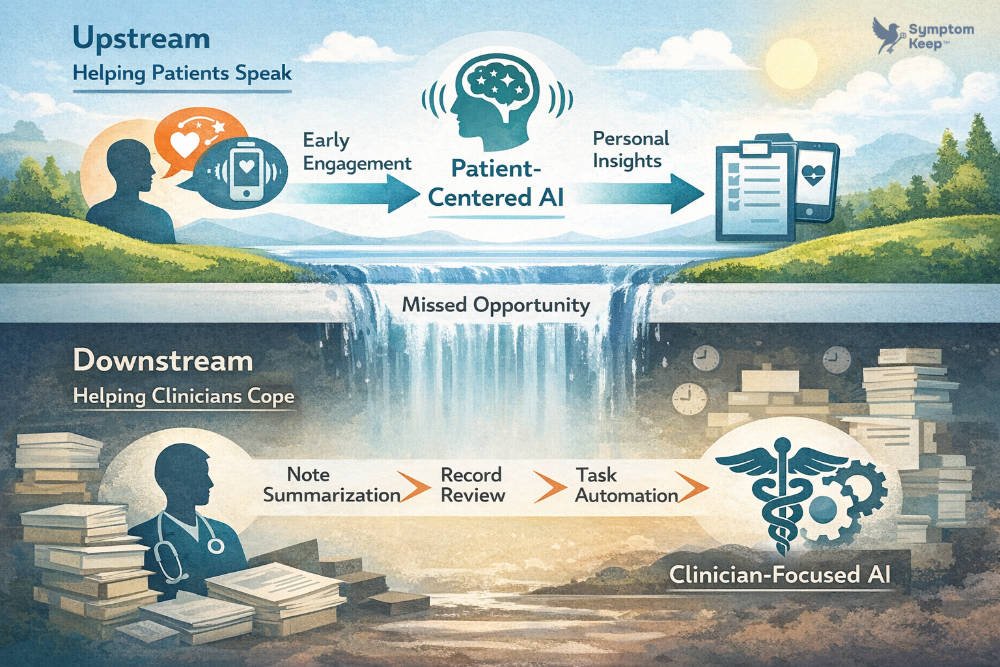

Over the past year, healthcare AI has made one thing increasingly clear. Most of it is being built to help clinicians cope.

- Cope with documentation overload.

- Cope with fragmented records.

- Cope with the reality of trying to care for too many patients in too little time.

Many of these tools are genuinely useful. Some are even impressive. But taken together, they reveal something quieter and more telling about where the industry is placing its attention. Instead of helping patients speak more clearly, earlier, and in their own words, we are using AI to manage the consequences of a system that still struggles to hear them.

Where healthcare AI is showing up

Recent clinician-facing tools, including products like Doximity’s DoxGPT and platforms such as OpenEvidence, reflect a consistent pattern. They are designed to reduce cognitive load once information has already entered the system.

- They summarize research.

- They help draft notes.

- They speed up decision-making inside existing workflows.

For clinicians operating under constant pressure, that kind of support matters. It’s not hard to understand why these tools are being adopted.

What’s notable is where they appear in the process. Almost all of this innovation happens after the patient encounter, not before it. AI is being applied where the system feels the most strain, not where the strain begins.

The moment before the chart opens

That distinction matters because the hardest part of care often isn’t documentation. It’s articulation.

When I began experiencing sudden dizziness and an elevated heart rate, I did what patients are instructed to do. I called my care team. The nursing staff wasn’t immediately available, and when they returned my call, I found myself trying to reconstruct an episode that had already passed.

I wasn’t just describing a feeling. I was trying to explain context. That my heart rate spiked after a nap, not exertion. That my Apple Watch showed numbers well outside my normal range. That the sensation was intense enough to make me feel like I might faint.

These details mattered. But they were difficult to convey in a short callback with someone managing a large caseload. Some of the nuance never made it into the record. Other parts were compressed into clinical shorthand.

The system captured data about me. Very little was captured from me.

What gets verified, and what gets missed

That pattern shows up in more routine care as well.

At nearly every visit, clinicians run through the same checklist. Current prescriptions. Supplements. Antihistamines. Beta blockers. Am I taking them? Has anything changed?

What’s rarely asked is how I’m actually experiencing those medications. Whether they’re helping. Whether side effects are tolerable. Whether something feels subtly wrong.

The process is efficient. It’s also impersonal. It confirms compliance without making much room for lived impact. That isn’t a failure of individual clinicians. It’s a system optimized to verify rather than to listen.

The knowledge that lives outside the system

Some of the most useful guidance I received during treatment didn’t come from a chart at all. It came from conversations in infusion rooms, passed quietly between patients, nurses, and caregivers.

One example still stands out. To help manage nausea and other chemo side effects, several people recommended a specific pairing: potato chips and Coca-Cola. The combination of salt and sugar, they said, helped settle the stomach or blunt the edge of the drugs.

I don’t drink soda anymore. I hadn’t eaten chips in years. But the advice was offered with confidence shaped by experience, not protocol.

Whether or not the mechanism was fully understood, the insight mattered. It was practical. It was human. And it existed entirely outside the system.

There was no place for it to live. No way to capture it, contextualize it, or return to it later. Like much of what patients learn along the way, it surfaced briefly and then disappeared.

What AI is helping with, and what it isn’t

This is where today’s healthcare AI feels incomplete.

Clinician-focused tools are designed to work with whatever information survives the journey into the chart. They help make sense of fragments. They help manage what remains.

What they don’t address is the fragile moment before that, when patients are still trying to understand what’s happening to them, let alone explain it clearly to someone else.

When patients struggle to articulate their experiences, clinicians are left working with partial signals. Applying AI at that point can help, but only within the limits of what was captured in the first place.

Why the industry keeps moving downstream

There are practical reasons this pattern persists.

Patient-facing systems introduce complexity. Privacy concerns. Liability questions. Emotional variability. Clinician-facing tools are more contained. Credentialed. Easier to govern and deploy. Easier to sell.

So innovation keeps flowing to the safest layer of the stack. The result is a steady improvement in how the system copes, without much progress in how it listens.

A different place to start

Clinician tools will continue to evolve, and they should. No one working inside today’s healthcare system would argue otherwise.

But coping isn’t the same as resolution. Speed isn’t the same as understanding.

If AI only enters the picture after a patient has already struggled to explain what they’re experiencing, then it’s reinforcing a familiar pattern. The system helps itself manage the consequences, while the root problem remains untouched.

The more difficult question is whether we’re willing to design for the moment before that. When the experience is still forming. When the details haven’t been flattened. When the patient still has something to say.
